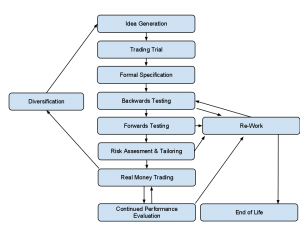

I realized this morning that it’s been almost an entire year since I wrote about the trading system development process pictured at right. That’s almost criminal, because in all that time I’ve never really gotten around to explaining the heart of the system development process: testing systems to make sure they work, and refining them when they don’t. This corresponds to the formal specification, backwards/forwards testing & re-work boxes in the diagram.

These steps are in some sense the most dangerous parts of the system development process, because it’s easy to fool yourself into thinking a system works when it doesn’t. In part this is because it’s nice to be optimistic and think you have a working system even when the evidence doesn’t say so. In other words, there’s a psychological bias involved. But the problem is made worse by deficiencies in the probability & statistics tools typically used for dealing with uncertainty. So even if you think you know how to do statistical confidence testing and so forth, what you know is probably more dangerous than useful. This article will try to get you pointed in the correct direction with a little help from some old friends: the infinite monkeys. If you haven’t read about them yet, I suggest you do so now. Let’s set up the problem:

Imagine you have a large group of monkeys who trade. Some of the monkeys are skilled monkeys – simian geniuses whose trades are much better than random. The rest of the monkeys are random monkeys – they just press buy or sell however their banana-fueled fancies dictate. Your job is to look at the track records of these monkeys and determine which ones you want trading your money. How do you do it?

Hopefully you agree that this is simply the process of trading system testing with the trading systems represented as monkeys. Some systems/monkeys are good. Some aren’t. Your job is to tell the two apart.

First, let’s look at the completely wrong way to solve the problem. You take the trading record of one monkey and ask how likely it is that a random monkey would do worse. In the case of binary win/loss trades, you could do this by integrating (that is, summing) the binomial distribution. Say the monkey had 21 winning trades and 9 losing trades (30 total), with all wins/losses being the same size. You would then integrate the binomial distribution from zero to (wins - 1) with P=0.5. That’s the probability a random monkey would do worse. In Excel/OpenOffice/Google Docs the formula for this would be:

=BINOMDIST(WINS-1,WINS+LOSSES,P,TRUE)

where the “TRUE” tells BINOMDIST() to integrate rather than giving you just one point. In our example, WINS is 21, LOSSES is 9 and P is 0.5. The resulting number is typically referred to as a “confidence level” or similar. In this case (21 wins/9 losses), it would be about 0.979 or 97.9%. While there’s no specific standard, 95%-plus confidence is typically used as a cutoff for believing something. So in this case we would deem our monkey skilled and let him manage our money. This is the basic logic behind almost all confidence testing in the sciences, although different distributions are used in different cases.
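For readers without a spreadsheet handy, here is a minimal Python sketch of the same calculation. The helper name `binom_cdf` is made up for this illustration; it simply sums the binomial probabilities from zero up to `k`, which is what BINOMDIST does when its last argument is TRUE.

```python
from math import comb

def binom_cdf(k, n, p):
    """P(X <= k) for X ~ Binomial(n, p); mirrors BINOMDIST(k, n, p, TRUE)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

wins, losses = 21, 9

# Probability that a coin-flipping monkey ends up with fewer than 21 wins in 30 trades.
confidence = binom_cdf(wins - 1, wins + losses, 0.5)
print(f"{confidence:.3f}")  # 0.979
```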

It’s also totally wrong.

To understand why it’s wrong, we need to look at the whole population of monkeys, not just one in a vacuum. Let’s say our population consists of 500 random monkeys and 1 skilled monkey. Let’s just assume for the sake of argument that the skilled monkey will always have at least 21 winning trades out of 30. So if we apply this same test to all 501 monkeys and keep those that get at least 21 winning trades out of 30, we keep the skilled monkey. But we would also expect to keep about 10.7 random monkeys on average (math: (1 – 97.86%) * 500, using the unrounded confidence level). So only about one in 12 of the test-passing monkeys (probability 0.085 or 8.5%) is actually skilled. Something’s wrong – our confidence and the real probabilities bear no resemblance to each other. We’re 97.9% confident that a monkey with 21 out of 30 wins is skilled, but in reality only 8.5% of the monkeys that pass that test are. What the hell happened?
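The population arithmetic can be checked with a short sketch. It assumes exactly the setup described above (500 random monkeys, 1 skilled monkey who always passes, a pass mark of 21 wins out of 30); the function and variable names are invented for the example.

```python
from math import comb

def tail_prob(min_wins, trades, p=0.5):
    """P(X >= min_wins) for X ~ Binomial(trades, p): chance a random monkey passes."""
    return sum(
        comb(trades, k) * p**k * (1 - p) ** (trades - k)
        for k in range(min_wins, trades + 1)
    )

n_random, n_skilled = 500, 1

# Chance that a single random monkey gets at least 21 wins out of 30.
false_positive_rate = tail_prob(21, 30)

# Average number of random monkeys that slip through the test.
expected_random_passers = n_random * false_positive_rate

# Fraction of test-passing monkeys that are actually skilled
# (assumes the skilled monkey always passes, as in the text).
share_skilled = n_skilled / (n_skilled + expected_random_passers)

print(f"random monkeys passing: {expected_random_passers:.1f}")
print(f"share of passers that are skilled: {share_skilled:.3f}")
```

The two printed values should land close to the 10.7 monkeys and roughly 1-in-12 odds quoted above.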

What happened was the revenge of Thomas Bayes, an 18th-century English mathematician. Bayes developed the mathematics for reasoning with evidence in the face of uncertainty. That sounds like a mouthful, but it’s really not. In this case, the uncertainty is whether a monkey is skilled or random. We have a prior belief about that (1 in 501 monkeys is skilled). We have some evidence (a monkey had at least 21 of 30 winning trades) and we want to update our prior belief to reflect the new evidence. By way of Bayes’ theorem (really, the odds version of Bayes’ rule, which has cleaner algebra) we determined that the new belief is that about 1 in 12 of the monkeys that pass the test is skilled.
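For anyone who wants to see the algebra, here is the odds form written out for this example. The symbols are my own shorthand, not standard notation from any text: S means "the monkey is skilled", R means "the monkey is random", and E means "the monkey won at least 21 of 30 trades". As before, the skilled monkey is assumed to always pass, so P(E | S) = 1.

```latex
\underbrace{\frac{P(S \mid E)}{P(R \mid E)}}_{\text{posterior odds}}
  = \underbrace{\frac{P(S)}{P(R)}}_{\text{prior odds}}
    \times
    \underbrace{\frac{P(E \mid S)}{P(E \mid R)}}_{\text{likelihood ratio}}
  = \frac{1}{500} \times \frac{1}{0.0214}
  \approx \frac{1}{10.7}

P(S \mid E) = \frac{1}{1 + 10.7} \approx 0.085
```

Posterior odds of 1 to 10.7 mean one skilled monkey for every 10.7 random ones among those that pass, which is the roughly 1-in-12 figure.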

Bayes’ theorem is a solidly proven mathematical fact. Strangely, most of science and mathematics disputed it, or at least disputed its importance, until late in the 20th century. I don’t have a good enough grasp of the history of mathematics to understand why that was, except perhaps to say that even smart people can be stupid sometimes. But the result of this historical error was that most of probability and statistics evolved with a non-Bayesian view, instead substituting confidence testing. The difference between the two is that confidence testing doesn’t take prior beliefs into account (in this case it only looks at one monkey) but Bayesian inference does consider prior information (it looks at all the monkeys).

One might wonder how such an error in thinking could go unnoticed for so long and indeed persist in essentially all prob/stat texts to this day. But it’s really not that hard – when the prior probabilities are close to 50/50, your confidence score and the true probability are almost the same. As a result, confidence testing appeared to work pretty well most of the time and thus became accepted practice. The place where confidence testing breaks down is in situations where the hypothesis you are testing is a priori extremely unlikely. That’s almost unheard of in the sciences (where you test hypotheses you think are right) but exactly the situation that arises in trading, where you’re trying to separate a few working strategies from many more non-working ones.
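To see how much the prior matters, here is a rough sketch that holds the test fixed and varies only the starting belief. The function name and the three priors are chosen for illustration; the 0.0214 pass rate for random monkeys comes from the 21-of-30 example, and skilled monkeys are assumed to always pass.

```python
def posterior_skilled(prior, p_pass_skilled=1.0, p_pass_random=0.0214):
    """Bayes' rule: P(skilled | passed the test) for a given prior P(skilled)."""
    skilled_and_pass = prior * p_pass_skilled
    random_and_pass = (1 - prior) * p_pass_random
    return skilled_and_pass / (skilled_and_pass + random_and_pass)

# Same evidence, three different starting beliefs about how common skill is.
for prior in (0.5, 0.1, 1 / 501):
    print(f"prior {prior:.4f} -> posterior {posterior_skilled(prior):.3f}")
```

With a 50/50 prior the posterior comes out at about 0.98, essentially the confidence level. With the 1-in-501 prior it drops to about 0.085, even though the test and the evidence are identical.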

This is not really related to trading, but misusing confidence intervals is now quietly destroying the accuracy of even the hardest of sciences. It used to be that most of the hypotheses being tested were common sense explanations for simple phenomena. As a result, they were almost always true. That meant that confidence testing was if anything on the conservative side and the “knowledge” it rubber stamped was almost always true. But now we’re in the opposite situation – most of the explanations being tested are extremely complex explanations of murky, hard-to-measure phenomena. As a result, we should be assuming a priori that most of these theories are wrong. In that situation, confidence testing is wildly optimistic. So optimistic in fact that I wouldn’t be surprised to learn that more than half of results tested to 95% confidence in scientific papers are in fact in error. If I could pick one thing I’ve written on this blog to go viral, one intellectual contribution I could make to mathematics and science, it would be the recognition that we are now shooting ourselves in the foot with the very statistical tool that once benefited us.

I’ve gotten as far as laying out the intellectual framework for testing trading strategies, but I haven’t actually explained how to do it yet. Part 2 will provide step-by-step instructions and address some additional practical concerns. Stay tuned.

This is wrong. If there is a 0.3% chance that a random monkey will have 23 winning trades and there are 500 random monkeys, that would mean there are 1.5 random monkeys that look skilled. That gives a 40% chance that a trading record matching this level of confidence is based on skill, not 7%.

Good catch – there was a typo that propagated through the post. I’ve fixed it, and I believe the new values are correct and illustrate the point.

Part 2 coming soon?